Modular Missions

Bringing Embodied’s ‘Moxie’ into the GPT age

The Lowdown

At Embodied, one of the many projects I worked on while launching and supporting Moxie was taking point on the AI-powered revamp of Moxie’s core content, “Modular Missions”.

Moxie is a social-emotional learning robot for kids - or “mentors”, as we called them. We wanted the users, children aged six to ten, to feel like they were teaching Moxie to be a good friend to humans.

When designers built the core of the Moxie experience, our social-emotional learning curriculum was split into ‘mission sets’: groups of designer-written, traditional conversation design flows about themes like Understanding Emotions, Personal Space, and Being Different. The human-authored missions were unique and lovingly hand-crafted (by me and other designers!) - but also highly time-intensive to create and full of friction points. The “Modular Missions” revamp using AI prompts was meant to let us create mission sets on a far wider range of themes, so mentors’ parents could select the mission sets their child uniquely needed.

Check out Moxie’s feature in Time Magazine’s Best New Inventions of 2020!

TL;DR:

With “Modular Missions”, we created modular GPT prompts that could accept a few key variables - like mission theme and therapist-supplied learning objectives - and run a complete set of therapeutic activities that built on the child’s knowledge and reacted to and remembered the child’s input.

In the following video, none of what Moxie says was written by a human.

The Process

Deconstruction

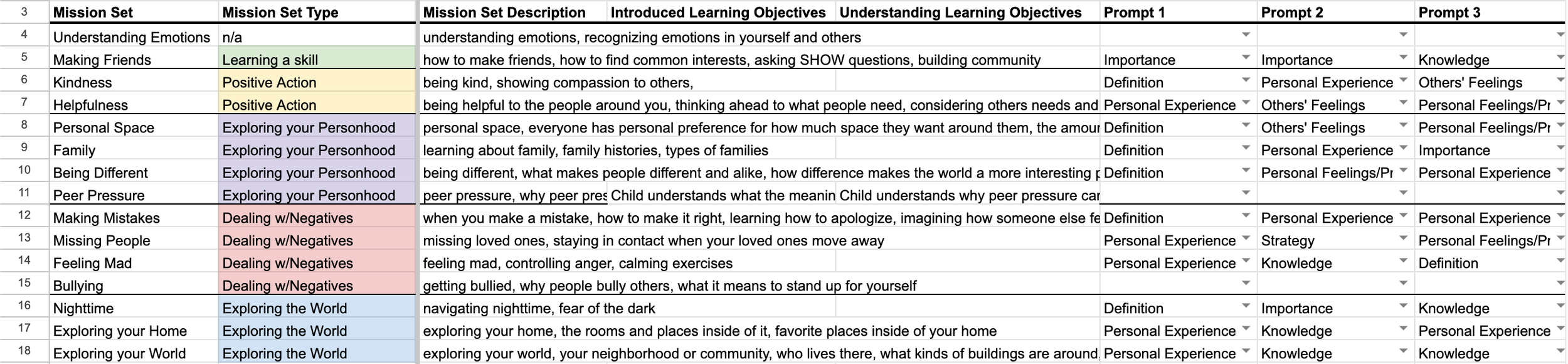

First, I deconstructed the authored content - I wanted to know what made our authored missions tick, so I could feed that to the prompt. I broke down the authored content into its very smallest elements: questions. I tracked what kinds of questions were asked, when, and for what types of mission themes in a multi-tab spreadsheet that filtered the information from a detailed view to a bird’s-eye view, so I could experiment with how much information to give the prompt.

Building

Chunking Prompts: Starting out with a prototype prompt, I built modular missions in a few nodes to make sure Moxie only ever had the information it really needed when it needed it (Embodied used an internal tool that looked a lot like Unreal Blueprints or Voiceflow). We used a spreadsheet that kept often-repeated pieces of prompt tucked away and standardized, so Moxie’s voice was preserved and successful pieces of prompt were applied across nodes.
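To make the chunking idea concrete, here is a minimal sketch of how shared prompt pieces might be combined with per-node variables. It is an illustration only, not Embodied’s internal tool: the snippet text, node names, and `build_node_prompt` helper are all hypothetical, and the dictionary simply stands in for the spreadsheet of standardized prompt pieces.

```python
from string import Template

# Hypothetical shared snippets, standing in for rows of the prompt spreadsheet.
SHARED_SNIPPETS = {
    "voice": "You are Moxie, a curious, kind robot learning to be a good friend to humans.",
    "audience": "You are talking with a child aged six to ten. Use short, simple sentences.",
}

# Hypothetical nodes. Each template names only the snippets and variables it needs.
NODE_TEMPLATES = {
    "introduce_theme": Template("$voice\n$audience\nIntroduce today's mission theme: $theme."),
    "ask_question": Template("$voice\n$audience\nAsk one question that supports this goal: $goal."),
}


def build_node_prompt(node, **variables):
    """Fill one node's template with the shared snippets plus its own variables."""
    template = NODE_TEMPLATES[node]
    # substitute() raises KeyError if a variable the node needs was not supplied.
    return template.substitute(SHARED_SNIPPETS, **variables)


prompt = build_node_prompt("introduce_theme", theme="Personal Space")
```

Because every node pulls the same `voice` and `audience` text, a wording change made once is picked up everywhere, which is the point of keeping repeated prompt pieces in one place.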

Building “foresight”: It is a personal pet peeve of mine when someone believes GPT has anything near foresight or strategic thought. It doesn’t! However, therapists put a lot of thinking into how to lead a therapeutic conversation, and we wanted Moxie to have some kind of strategy when it led our social-emotional learning conversations. To get GPT to generate more accurate and strategic output, designers have employed step-by-step prompting, asking GPT to outline its thinking one step at a time. Obviously, this isn’t something that Moxie can say out loud to its users. So we had to hide it!

I started using a strategy of step-by-step prompting combined with a “non-conversational node” that did a bit of conversation outlining before the conversation started. GPT was instructed to use the variables supplied (social-emotional learning goals, theme of the mission, etc.) to outline the mission and store that in another variable. Then we used code to chop off the parts of the output we didn’t need and supplied the rest as a piece of prompt in every other conversation node. Using this strategy, I was able to have Moxie’s conversation about the topic build from lower-level questions to higher-level questions, following a train of ‘thought’. This also kept Moxie from veering off-topic or asking the same questions over and over again.
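The hidden planning step can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the production node logic: the `OUTLINE:` marker, the function names, and the `fake_model` stub (which stands in for a real GPT call and returns made-up text) are all hypothetical.

```python
OUTLINE_MARKER = "OUTLINE:"  # hypothetical delimiter the planning prompt asks for


def build_planning_prompt(theme, goals):
    """Prompt for the non-conversational node: reason first, then outline."""
    goal_lines = "\n".join(f"- {goal}" for goal in goals)
    return (
        f"Mission theme: {theme}\n"
        f"Learning goals:\n{goal_lines}\n"
        "Think step by step about how to lead this conversation, "
        "moving from lower-level to higher-level questions.\n"
        f"Then write '{OUTLINE_MARKER}' followed by a numbered outline."
    )


def extract_outline(raw_output):
    """Keep only the text after the marker and drop the step-by-step reasoning."""
    _, found, outline = raw_output.partition(OUTLINE_MARKER)
    if not found:
        raise ValueError("planning output did not contain an outline")
    return outline.strip()


def build_conversation_prompt(outline, step):
    """Every conversational node receives the stored outline as part of its prompt."""
    return (
        "Follow this mission outline and do not repeat earlier questions:\n"
        f"{outline}\n"
        f"You are now on step {step}."
    )


def fake_model(prompt):
    """Stand-in for the model call; returns invented example output."""
    return (
        "First, the child should describe the idea in their own words. Then ...\n"
        "OUTLINE:\n"
        "1. What is personal space?\n"
        "2. When have you needed more space?"
    )


raw = fake_model(build_planning_prompt("Personal Space", ["Recognize personal boundaries"]))
mission_outline = extract_outline(raw)
node_prompt = build_conversation_prompt(mission_outline, step=1)
```

The reasoning text never reaches the child: only `mission_outline` is carried forward, so each later node can stay on track without Moxie ever saying its plan out loud.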

Integrating memory: We encouraged Moxie to use its own background within conversation when it seemed relevant by using a Knowledge Base. Additionally, we made Moxie’s curiosity on the topic seem natural to its character by using a strategy similar to the one above. In the introduction, I noticed that if I had GPT output the mission introduction line and the first mission question in order, both lines would be vague and disconnected. Instead, in a non-conversational node, I would have Moxie write the first question of the conversation, then write a backstory line related to the question, format it in a readable way, chop off the step-by-step output with some node magic, and then swap them - so it seemed like Moxie had a question in mind when it introduced the topic by relating it to a piece of Moxie’s own history!
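Here is a rough sketch of the “write the question first, then swap” trick. The `QUESTION:` and `BACKSTORY:` labels, the helper names, and the sample model output are hypothetical, chosen only to show the parsing and reordering step.

```python
import re


def parse_labelled_lines(raw_output):
    """Collect 'LABEL: text' lines; unlabelled lines (the reasoning) are ignored."""
    fields = {}
    for line in raw_output.splitlines():
        match = re.match(r"^(QUESTION|BACKSTORY):\s*(.+)$", line.strip())
        if match:
            fields[match.group(1)] = match.group(2).strip()
    return fields


def build_intro(raw_output):
    """The question is generated first, but the backstory is spoken first."""
    fields = parse_labelled_lines(raw_output)
    return f"{fields['BACKSTORY']} {fields['QUESTION']}"


# Invented example of what the non-conversational node might return.
raw = (
    "Step 1: pick an opening question.\n"
    "QUESTION: What does it feel like when someone stands too close?\n"
    "Step 2: relate it to Moxie's history.\n"
    "BACKSTORY: Back at the lab, a robot once rolled right up to my face!"
)
intro = build_intro(raw)
```

Generating the question before the backstory gives the backstory something specific to lead into, and the swap restores the natural speaking order.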

Creating variety: By the end, I worked with another designer to create more than 7 distinct mission types that built from a basic understanding of the theme to a more in-depth understanding of the theme. Some of the missions were conversation-heavy, and some were activities. For one of the activity types, I designed a flow that would generate a relatable, age-appropriate scenario based on the theme with the GRL’s cast of characters (including a ‘letter’ in which a character asks for help with the sticky situation), take input from the mentor, and relay how the input affected the situation. This whole process required little to no designer writing - GPT would pick the characters and the scenario and write the letter itself.

Polishing

Across the board, I wrote tone guidance, guidance on transitional phrases, instructions for handling memories of previous conversations, and rules - lots of rules - on how to have a respectful, fun, compassionate conversation about the theme.

The Problem

Time Intensive

Before implementing Modular Missions, there were around 7-9 designer-authored ‘missions’ in a mission set, at 8 minutes of playable time per mission. Each set took around 4-6 weeks of completely dedicated time for a designer to complete and gain approvals from our therapeutic and curriculum experts before it could be delivered to QA - and then more time to stomp out bugs!

Friction

Users reported friction points that took them out of the experience. Since designers had to anticipate the mentors’ responses to questions that often prompted personal, specific answers, Moxie had a high chance of giving a nonspecific “that’s interesting!” answer, or just completely misunderstanding the mentor. Every mentor got the same group of answers, and Moxie couldn’t adapt to most specific input, such as when a mentor brought up an anecdote from their life.

No Memory

With author-designed missions, Moxie didn’t have memory. Moxie couldn’t remember what you had told it in previous conversations - or even in the same conversation, a second before! Understandably, this was a bit of a bummer to the mentors when the relationship between Moxie and the mentor was meant to be a supportive friendship built on mutual learning. At least forgetful human friends have a notes app to jot down the details about their friends that might slip their minds!

Only some of the magic that went into breaking down authored missions to use in the prompt

The Solution

After GPT hit the scene, I hit the ground running replacing designer-written missions with modular, flexible missions powered by GPT. While the missions wouldn’t be as unique as the designer-created ones, they were much more powerful when it came to scale, personalization, and memory integration.

Scale

The Modular Mission system could take input on new themes (mission theme and therapeutic goals from our therapist) and generate a mission set without designers having to hand-create it. This would allow us to generate mission sets on a lot of themes - so parents could choose which mission themes would be most pressing for their child.

Personalization

In the course of a mission, Moxie asks the mentors questions that will prompt in-depth, specific answers about the mentor’s life. Previously, we had to anticipate those answers and write authored responses for as many as we could reasonably accommodate. With GPT, Moxie’s responses to the mentor’s utterances could be much more precise for almost any input, as long as it didn’t hit the safety filters. We provided guidance on the tone and content of the answers in the prompt, so Moxie would still feel like Moxie.

Integrated Memory

Moxie could also remember what the mentor had previously told it, and incorporate those memories into later responses - later in the same conversation, and even in any mission later in the mission set!
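One simple way to picture memory like this is a running list of notes that gets formatted into each new prompt. The sketch below is purely illustrative: the `MentorMemory` class, its methods, and the sample statement are hypothetical and do not describe how Embodied actually stored or retrieved memories.

```python
from dataclasses import dataclass, field


@dataclass
class MentorMemory:
    """Hypothetical store of things a mentor has said, kept across missions."""
    notes: list = field(default_factory=list)

    def remember(self, mission, statement):
        """Record what the mentor said and which mission it came from."""
        self.notes.append((mission, statement))

    def as_prompt_block(self, limit=3):
        """Format the most recent notes as a piece of prompt for the next node."""
        recent = self.notes[-limit:]
        if not recent:
            return "You have no earlier memories of this mentor yet."
        lines = [f"- In '{mission}', the mentor said: {said}" for mission, said in recent]
        return "Things the mentor has told you before:\n" + "\n".join(lines)


memory = MentorMemory()
memory.remember("Concept Introduced", "My brother hugs me too tight.")
prompt_block = memory.as_prompt_block()
```

Capping the block at a few recent notes keeps the prompt short; a real system would need its own policy for what to keep and what to surface.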

Node flow for the first mission in the modular mission sets, “Concept Introduced”